MLPerf Inference v3.0: Low-Power AI from Dell Servers with Qualcomm Cloud AI 100 Accelerators

July 2023

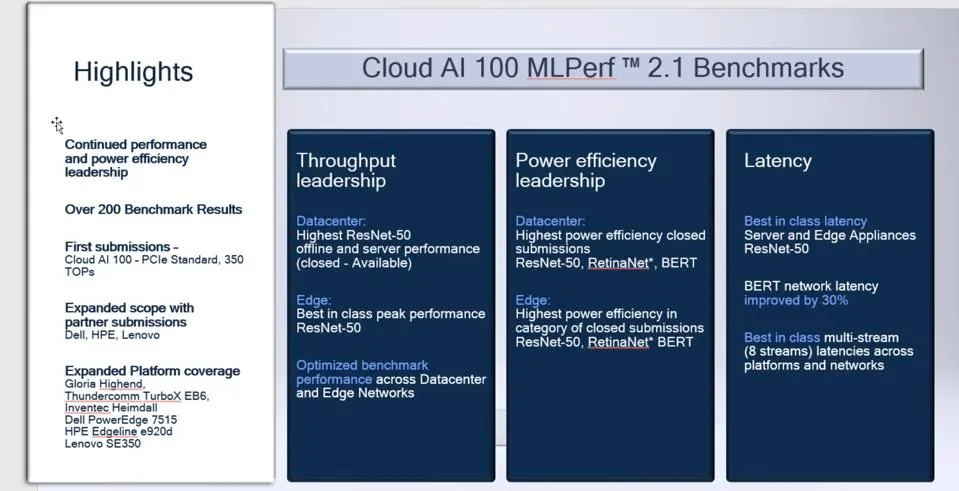

We detail the performance results of our work with Dell and Qualcomm for MLPerf Inference v3.0. In the datacenter category, the Dell R7515 server with four Qualcomm Cloud AI 100 Pro accelerators improves performance by up to 109 percent and power efficiency by up to 62 percent compared with the v2.1 submission.

HiPEAC info: Accelerating Sustainability

July 2023

Can acceleration contribute towards sustainability goals? In this article, Natalie Durzynski and Dr Anton Lokhmotov (KRAI) explain the role that accelerated computing can play in efficiency improvements.

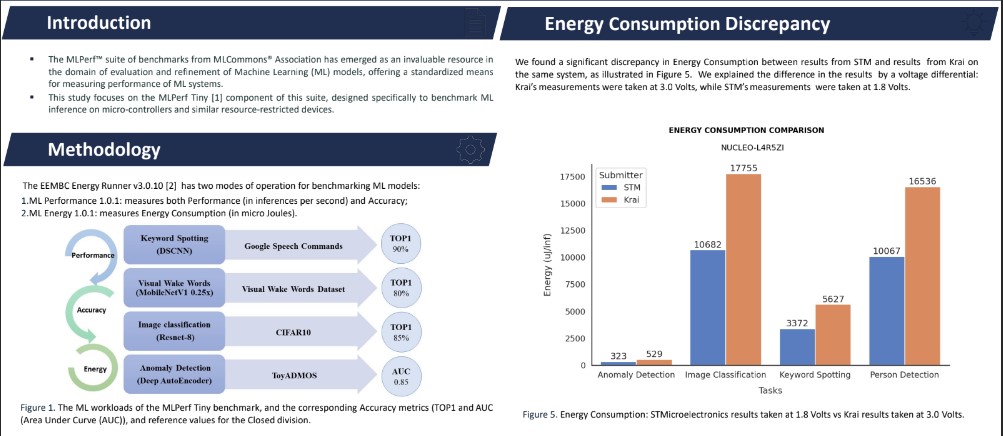

The MLPerf™ Tiny benchmark: Reproducing v1.0 results and providing new results for v1.1

July 2023

KRAI's Saheli Bhattacharjee and Anton Lokhmotov present our first submission to the MLPerf Tiny benchmark, including our unique findings.

MLPerf Results Show Rapid AI Performance Gains

June 2023

Our team proudly delivered over a quarter of all submissions to MLPerf Tiny v1.1.

Laying bare what's under the KILT

April 2023

With another successful round of submissions to MLCommons' MLPerf Inference done and dusted, our team at KRAI reflected on one of our most exciting achievements in this round, affectionately known as KILT.

Qualcomm Cloud AI 100 continues to lead in power efficiency with the latest MLPerf v3.0 results

April 2023

Powered by our software technologies, Qualcomm's MLPerf Inference v3.0 submissions continue to demonstrate performance leadership and achieve their highest power efficiency yet.

Qualcomm Is Still The Most Efficient AI Accelerator For Image Processing

September 2022

Using MLPerf results that we obtained on behalf of Qualcomm, Forbes zoom in on how the Qualcomm Cloud AI 100 accelerator outshines NVIDIA GPUs in computer vision.

Marching on with MLPerf, Breaking Some Records While Attempting New Ones

September 2022

Lenovo demonstrate a diverse portfolio of compute platforms for AI workloads, including results powered by our technologies.

More choices to simplify the AI maze: Machine learning inference at the edge

August 2022

Optimized and delivered by KRAI, industry-leading MLPerf benchmarking results underpin the strong collaboration between HPE and Qualcomm.

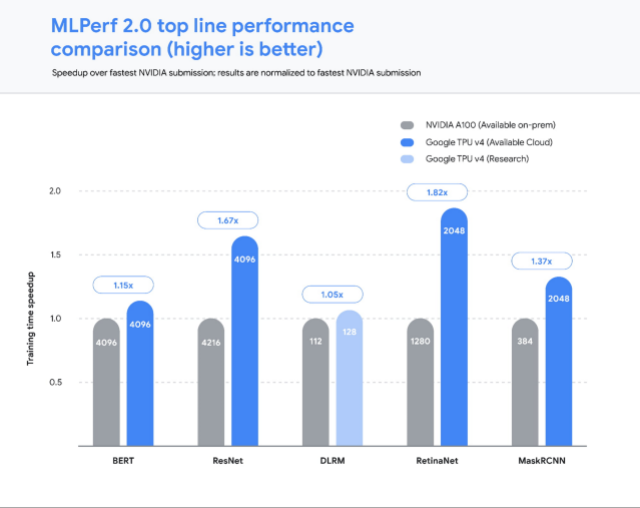

Google Celebrates MLPerf Training Wins

July 2022

Our team, as MLPerf Inference veterans, submitted a ResNet training time of 284.038 minutes for a system with two Nvidia RTX A5000 GPUs. This entry-level option may be compared with one of Nvidia's results for a system with two A30 GPUs, which completed the training in 235.574 minutes. While the A5000s consumed 39% more power and were 20% slower, they are also 2–3× cheaper.

HPE AI Inference solution featuring Qualcomm® Cloud AI 100

August 2022

Our MLPerf submissions on behalf of HPE deliver high performance and reduce latency associated with complex artificial intelligence and machine learning models.

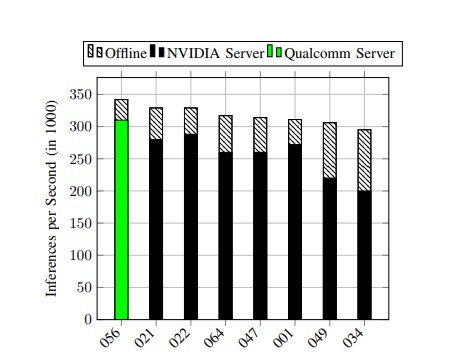

Scaling MLPerf™ Inference vision benchmarks with Qualcomm Cloud AI 100 accelerators

June 2022

We describe how we overcame the challenges of achieving linear scaling for MLPerf™ Inference vision benchmarks on Datacenter and Edge servers equipped with Qualcomm Cloud AI 100 accelerators.

Nvidia Dominates MLPerf Inference, Qualcomm also Shines, Where’s Everybody Else?

April 2022

The article highlights exceptional MLPerf Inference v2.0 results that we obtained on behalf of Qualcomm in the Datacenter and Edge categories.

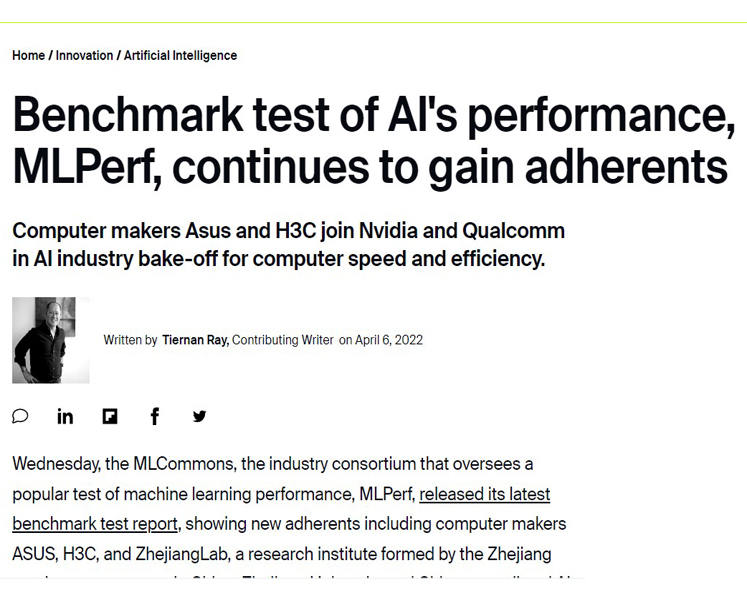

Benchmark test of AI's performance, MLPerf, continues to gain adherents

April 2022

Our MLPerf Inference v2.0 submissions are noted as being the most numerous and some of the best-performing in MLPerf.

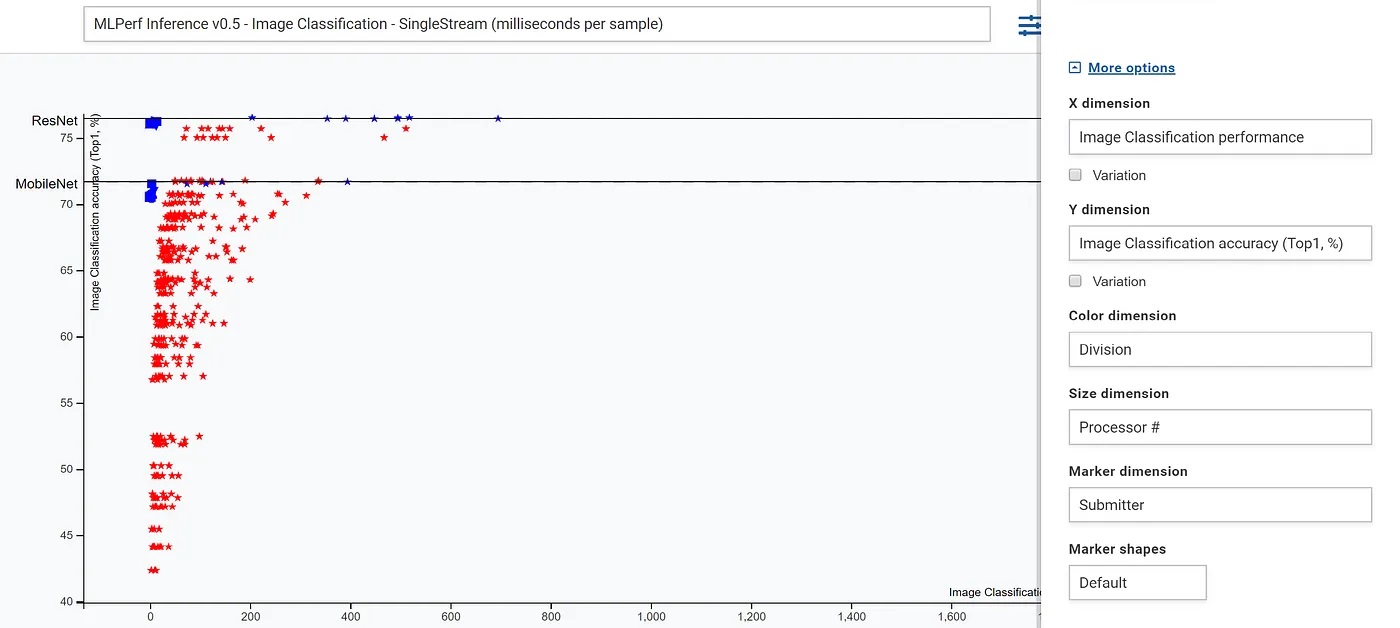

Demystifying MLPerf Inference

May 2020

Dr Anton Lokhmotov (KRAI CEO) explains the importance and benefits of MLPerf Inference benchmarks.

Benchmarking as a product: a case for Machine Learning

April 2020

Dr Anton Lokhmotov (KRAI CEO) makes the case for industrial-grade benchmarking.